“Our children’s apps aren’t directed at children.”

In our study of kids’ Android apps, we observed that a majority of apps specifically targeted at kids may be violating U.S. privacy law: the Children’s Online Privacy Protection Act (COPPA). In response, many companies that we named in our paper have stated that they are not covered by the law because either their apps are not directed at children or they have no knowledge that any of their users are children. More broadly, we have also noticed that many companies appear to turn a blind eye to COPPA compliance by stating in their privacy policies that their obviously child-directed apps are not directed at children.

As I’ll explain in this post, these excuses are disingenuous at best and outright lies at worst: for every app that we examined, the developer took proactive steps to market the app to children under 13 and therefore appears to be subject to COPPA, because the app is “directed” at children.

Background on COPPA

The Children’s Online Privacy Protection Act (COPPA) is one of the few comprehensive privacy laws in the U.S., if not the only one. It governs the types of data that may be collected from children under 13 and allows parents to make decisions about this data collection. The FTC is charged with enforcing COPPA and offers comprehensive guidance on how to comply with it.

Who must follow COPPA?

The FTC’s guidance states that COPPA covers:

…operators of commercial websites and online services (including mobile apps) directed to children under 13 that collect, use, or disclose personal information from children, and operators of general audience websites or online services with actual knowledge that they are collecting, using, or disclosing personal information from children under 13.

Thus, if a website or online service (such as a mobile app) is specifically directed at children, it must comply with COPPA. Similarly, if a website or online service is designed for general audiences (i.e., not directly targeted at children), but the operator has actual knowledge that children are amongst its user base, it too must comply with COPPA.

The FTC provides several tests to help determine whether or not a website or service should be deemed “directed at children”:

The amended Rule sets out a number of factors for determining whether a website or online service is directed to children. These include subject matter of the site or service, its visual content, the use of animated characters or child-oriented activities and incentives, music or other audio content, age of models, presence of child celebrities or celebrities who appeal to children, language or other characteristics of the website or online service, or whether advertising promoting or appearing on the website or online service is directed to children. The Rule also states that the Commission will consider competent and reliable empirical evidence regarding audience composition, as well as evidence regarding the intended audience of the site or service.

Even if the developer of a mobile app doesn’t directly market it to children, it still may be subject to COPPA if its design suggests that children are amongst its intended audience. More importantly, the FTC directly states that (emphasis mine):

If your service targets children as one of its audiences – even if children are not the primary audience – then your service is “directed to children.”

Thus, if a developer markets their app to children under 13, even if children under 13 are not the only intended audience, then the app is likely to be subject to COPPA.
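The coverage rule quoted above reduces to a short decision, sketched below. This is only an illustration of the logic as described in this post, not legal advice or an FTC tool; the `Service` class and its field names are hypothetical and chosen for readability.

```python
from dataclasses import dataclass


@dataclass
class Service:
    """Hypothetical summary of a website or app, for illustration only."""
    handles_personal_info: bool         # collects, uses, or discloses personal information
    targets_children: bool              # children under 13 are ONE of the intended audiences
    children_are_primary: bool          # children under 13 are the MAIN audience
    actual_knowledge_of_children: bool  # operator knows it has data from children under 13


def likely_covered_by_coppa(service: Service) -> bool:
    # Per the FTC language quoted above, a service that targets children as
    # one of its audiences is "directed to children", so children_are_primary
    # is deliberately ignored here.
    directed_to_children = service.targets_children
    return service.handles_personal_info and (
        directed_to_children or service.actual_knowledge_of_children
    )


# A mixed-audience app that also targets children is still likely covered.
mixed_audience_app = Service(
    handles_personal_info=True,
    targets_children=True,
    children_are_primary=False,
    actual_knowledge_of_children=False,
)
print(likely_covered_by_coppa(mixed_audience_app))
```

Note that the "primary audience" field never enters the decision, which is exactly why the "grownups play our games too" argument does not help a developer who markets to children.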

Were the apps we tested covered by COPPA?

As I explain above, apps must follow COPPA if the developers either have actual knowledge that children under 13 are amongst their user base, or if those apps are directed at children. Every single app that we tested was directed at children, not just because of the marketing or appearance (or the other subjective tests), but because its developers explicitly represented, under contract, that their app is in fact directed to children by participating in Google’s Designed for Families program.

Google’s Designed for Families program

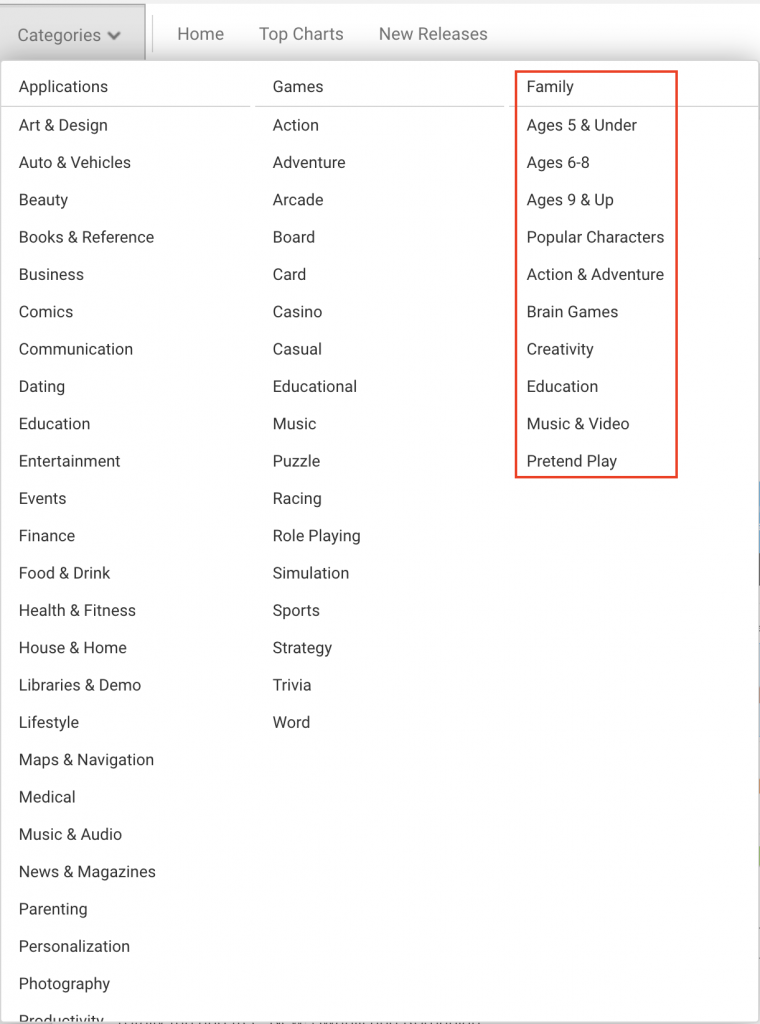

When an Android developer wishes to market their app to children, they must opt in to the “Designed for Families” (DFF) program. This allows the app to be categorized under Google Play’s “Family” category of apps:

Apps appearing in the “Family” category are in the Designed for Families (DFF) program, because the developer opted into it.

To be clear: for an app to be listed in this category, it requires proactive steps on the developer’s part. That is, every single app listed under Google Play’s “Family” category is the result of developers taking steps to market their apps to children (i.e., this isn’t the result of Google automatically categorizing the app).

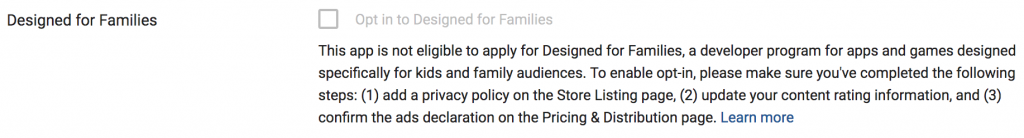

In the Android Developer’s Console, when uploading an app to Google Play, the developer opts into the program by selecting the following checkbox:

As can be seen, opting into the DFF program isn’t even possible by default: the developer must first add a privacy policy, indicate that their app’s content is suitable for children, and then affirm that, if ads are present in the app, no behavioral advertising is performed. Google, to its credit, provides developers with extensive documentation on COPPA compliance, as well as a list of eligibility criteria with which the developer must affirm compliance:

1. Apps must be rated as ESRB Everyone or Everyone 10+, or equivalent.

2. If your Designed for Families app displays ads, you confirm that:

   - 2.1 You comply with applicable legal obligations relating to advertising to children.

   - 2.2 Ads displayed to child audiences do not involve interest-based advertising or remarketing.

   - 2.3 Ads displayed to child audiences present content that is appropriate for children.

   - 2.4 Ads displayed to child audiences follow the Designed for Families ad format requirements.

3. You must accurately disclose the app’s interactive elements on the content rating questionnaire, including:

   - 3.1 Users can interact or exchange information

   - 3.2 Shares user-provided personal information with third parties

   - 3.3 Shares the user’s physical location with other users

4. Apps that target child audiences may not use Google Sign-In or any other Google API Service that accesses data associated with a Google Account. This restriction includes Google Play Games Services and any other Google API Service using the OAuth technology for authentication and authorization. Apps that target both children and older audiences (mixed audience), should not require users to sign in to a Google Account, but can offer, for example, Google Sign-In or Google Play Games Services as an optional feature. In these cases, users must be able to access the application in its entirety without signing into a Google Account.

5. If your app targets child audiences and uses the Android Speech API, your app’s RecognizerIntent.EXTRA_CALLING_PACKAGE must be set to its PackageName.

6. You must add a link to your app’s privacy policy on your app’s store listing page.

7. You represent that apps submitted to Designed for Families are compliant with COPPA (Children’s Online Privacy Protection Rule) and other relevant statutes, including any APIs that your app uses to provide the service.

8. If your app uses Augmented Reality, you must include a safety warning upon launch of the app that contains the following:

   - 8.1 An appropriate message about the importance of parental supervision

   - 8.2 A reminder to be aware of physical hazards in the real world (e.g., be aware of your surroundings)

9. Daydream apps are not eligible to participate in the Designed for Families program.

10. All user-generated content (UGC) apps must be proactively moderated.

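In other words, by opting into the DFF program, a developer affirms to Google both that the app targets children and that it complies with COPPA.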

Were the apps we examined subject to COPPA?

So far I’ve explained how apps become subject to COPPA, as well as Google Play’s DFF program. In our study, every single app that we examined came from one of two sources:

- Google Play’s DFF program, wherein the developer proactively took steps to market their app to children and represented to Google that their app is directed to children.

- Various “Safe Harbor” providers, who, for a fee, will “certify” an app as being COPPA compliant. (More on this in a subsequent blog post.)

The point is, for every single app that we examined, the developer took steps to market their app towards children: either through enrolling in Google’s DFF program, or by paying an organization to deem the app “safe” for children to use. Thus, every app that we examined should be subject to COPPA because it is clearly directed at children (even if children are only a part of a larger intended audience).

What companies are saying

After our research became public, many of the companies named in our paper have issued public statements claiming that our research is incorrect because their apps are not subject to COPPA. Now that you understand how an app becomes subject to COPPA, you can see why this is disingenuous.

Tiny Lab Productions

In this CNET article, the CEO of Tiny Lab Productions claimed that their apps are not subject to COPPA because they are not directed at children:

Fun Kid Racing alone has more than 10 million downloads, according to the app page. The app’s developers, Tiny Lab Productions, said in an email that its apps are “directed for families,” and not children, because “we see that grownups and teens plays our games.” Players are supposed to enter their birth date, and if they are under 13, the app doesn’t collect any data, said Tiny Labs Productions CEO Jonas Abromaitis.

Forgetting for a moment that this latter statement about it not collecting data from those under 13 is untrue (and that their age gate appears to not follow FTC guidelines—more on age gates in another blog post), the fact that “grownups and teens” play their games in addition to children is irrelevant. Their website makes it abundantly clear that their games are directed at children. This is from a screenshot taken on April 21, 2018, five days after the CNET article was published (as of this writing, this remains on the website):

“Racing game for kids”

“Children love it!”

“Fun Kid Racing is one of the best racing games for kids”

“…features simple controls that children love”

“The levels are designed specifically for children”

Similarly, this app appears in the Google Play Store under the DFF “Action & Adventure” category. (You can tell that a specific app is in the DFF program by mousing over the category labels; links to DFF categories are prefaced with “FAMILY_”.) That is, Tiny Lab Productions went out of its way to opt into the DFF program so that they could market the game to kids. This is a child-directed app.
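The mouse-over check described above can also be done programmatically. The sketch below assumes that the category link ends in the category identifier (for example, a hypothetical `.../store/apps/category/FAMILY_ACTION`); that URL shape is an assumption for illustration, and the function name `is_dff_category_link` is made up for this example.

```python
from urllib.parse import urlparse


def is_dff_category_link(url: str) -> bool:
    """Return True if the last path segment of a category link starts with FAMILY_."""
    path = urlparse(url).path
    # Take the final path segment, ignoring any trailing slash.
    category_id = path.rstrip("/").rsplit("/", 1)[-1]
    return category_id.startswith("FAMILY_")


# Hypothetical category links, shown only to exercise the function.
print(is_dff_category_link("https://play.google.com/store/apps/category/FAMILY_ACTION"))
print(is_dff_category_link("https://play.google.com/store/apps/category/GAME_RACING"))
```

This only automates the visual check; it says nothing about how the listing got there beyond what the post already explains, namely that the developer opted in.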

Duolingo

Duolingo also participates in the DFF program, and is listed under the “Education” category. This article in Education Week acknowledges the disconnect between Duolingo’s public statements about their responsibilities and their participation in the program:

But in an emailed statement, a spokesman said “Duolingo is an online service directed at a general audience”—indicating that the company does not believe it is subject to COPPA, even though it apparently opted in to the Designed for Families section of the Google Play store.

Again, by participating in the DFF program, Duolingo is representing to Google that children are amongst its audience, and therefore the app is directed at children (even if not all its users are children).

MiniClip

Another pattern that we noticed is that many DFF apps have privacy policies that state that their developers have no knowledge of collecting data from children or that their apps are not directed at children (despite being in the DFF program!). MiniClip is one of those companies. Unlike the other companies above, they have not contacted us directly or made public statements (beyond the claims in their privacy policy), but we include them here as an example because they claim that their games have been downloaded over a billion times.

MiniClip makes a wide variety of apps featuring cartoon characters and other elements that likely make them subject to COPPA under the FTC’s subjective tests (i.e., “visual content, the use of animated characters or child-oriented activities and incentives, music or other audio content, age of models, presence of child celebrities or celebrities who appeal to children, language or other characteristics of the website or online service”). However, three of their apps do participate in the DFF program:

All three of these apps access location data without parental consent, and at least two of them transmit identifiers without properly securing those transmissions using TLS (i.e., HTTPS). (This by itself may violate COPPA’s requirement that personal data be transmitted using “reasonable” security measures.)
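To make the TLS point concrete, here is a minimal sketch of how one might flag transmissions that are not protected by HTTPS, given a list of request URLs observed from an app. It is not the method used in our study; the function name and the example URLs are hypothetical.

```python
from urllib.parse import urlparse


def insecure_transmissions(observed_urls):
    """Return the observed request URLs that do not use HTTPS (i.e., no TLS)."""
    return [
        url for url in observed_urls
        if urlparse(url).scheme.lower() != "https"
    ]


# Hypothetical traffic captured from an app; example.com is a placeholder domain.
observed = [
    "https://ads.example.com/track?id=abc123",
    "http://analytics.example.com/collect?device_id=abc123",
]
for url in insecure_transmissions(observed):
    print("Sent without TLS:", url)
```

Any identifier carried in a flagged URL would be readable by anyone on the network path, which is why such transmissions are hard to square with a “reasonable” security requirement.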

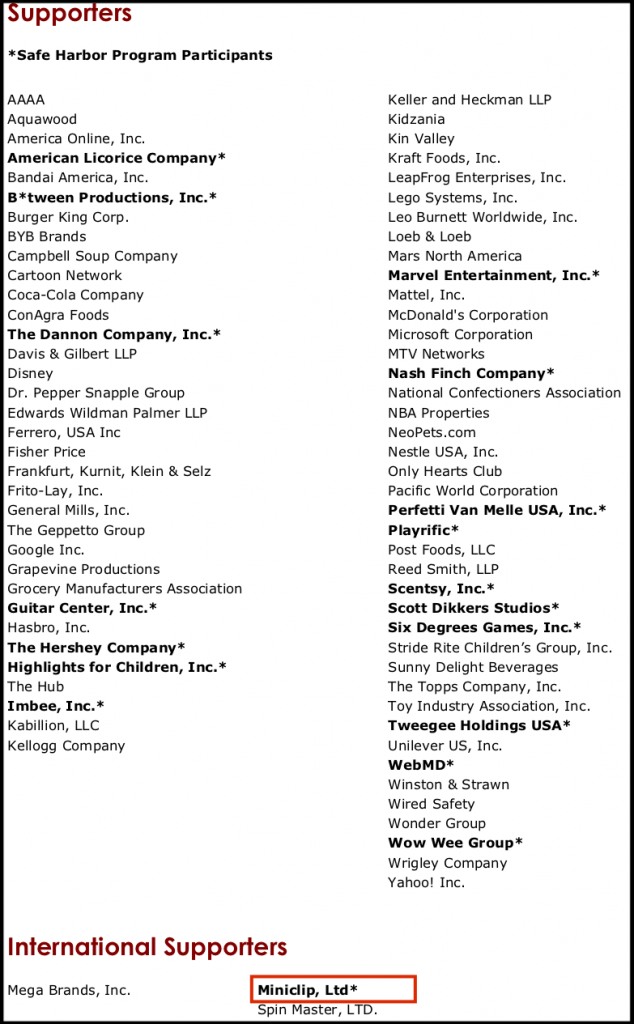

More importantly, MiniClip also appears to participate in CARU’s COPPA Safe Harbor program (as of this writing):

What this means is that MiniClip paid CARU, an industry trade organization, a sum of money so that they could be declared in compliance with COPPA.

Yet, MiniClip’s privacy policy (as of this writing), which governs all three of the apps listed above, specifically states that their services “are not specifically targeted at children.”

So how can a company claim to not target children when that company (a) makes games featuring cartoons and other children’s subject matter; (b) has gone to great lengths to opt into the Designed for Families program so that they can market those games to children under 13; and (c) has paid money to certify those games as being suitable for children?

More on Safe Harbor programs in a future post.